The rapid advancement of artificial intelligence presents a formidable challenge to information integrity. Synthetic media, particularly that created by sophisticated AI models, is becoming increasingly pervasive and difficult to detect.

The Proliferation of Synthetic Media

The growing ease with which synthetic media can be produced and disseminated is a significant concern. Advanced algorithms now allow individuals, regardless of technical expertise, to generate highly realistic yet entirely fabricated content. This accessibility has led to an unprecedented proliferation of manipulated images, videos, and audio recordings, blurring the line between authentic and artificial information. The rapid growth in the availability of these tools and techniques poses a serious threat to the integrity of the information ecosystem. Social media platforms accelerate the spread of such content, and they often lack mechanisms to identify and curtail it effectively. Together, these factors create fertile ground for misinformation and erode public trust in established sources of information. The implications for societal discourse and informed decision-making are profound and require urgent, concerted attention from researchers, policymakers, and the public alike.

Mechanisms of AI-Driven Disinformation

AI-powered tools enable the creation of disinformation that is increasingly sophisticated and difficult to detect. Malicious actors use these tools to manipulate images, video, and audio.

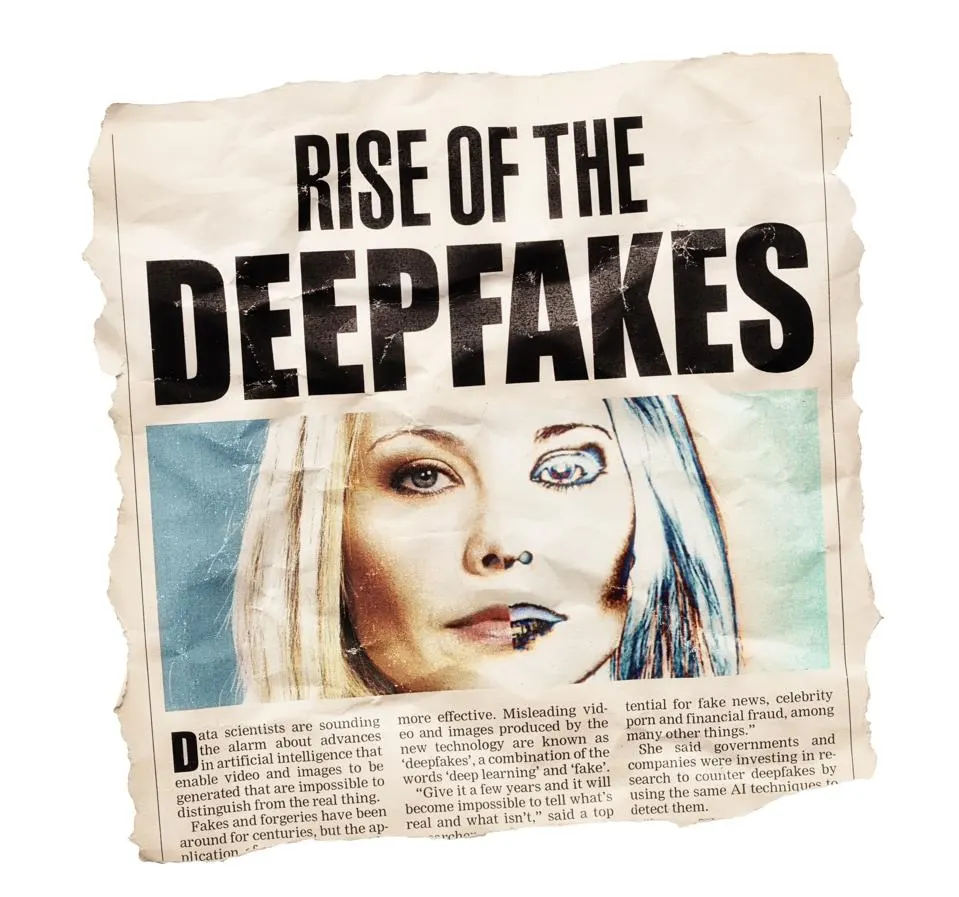

Deepfakes: Visual and Auditory Manipulation

Deepfakes are a particularly potent form of AI-driven disinformation. They use sophisticated machine learning algorithms to create highly convincing but entirely fabricated visual and auditory content, typically by manipulating existing footage or audio recordings to depict individuals saying or doing things they never said or did. The resulting synthetic media can be remarkably realistic, making it exceptionally difficult for even trained observers to distinguish genuine content from fabricated content. The potential for misuse is substantial: deepfakes can undermine public trust in leaders, damage reputations, and incite social unrest. Because deepfake technology evolves rapidly, new detection methods are needed to mitigate the risks of this form of manipulated media. The ability to fabricate realistic video and audio raises serious questions about the veracity of information and about how malicious actors could exploit these capabilities to manipulate public opinion and undermine democratic processes.

Navigating the Future of Information Integrity

The proliferation of AI-generated disinformation poses a direct threat to the integrity of democratic institutions. Convincing falsehoods could be used to manipulate elections and erode trust in governance.