Managing such a huge array of chips to develop Llama 4 will likely present unique engineering challenges and require an enormous amount of energy. Meta executives on Wednesday sidestepped an analyst’s question about whether energy access constraints in parts of the U.S. are hampering companies’ efforts to develop more powerful AI.

According to one estimate, a cluster of 100,000 H100 chips would require 150 megawatts of power. The largest national laboratory supercomputer in the United States, El Capitan, by contrast, requires 30 megawatts of power. Meta expects to spend up to $40 billion in capital this year to furnish data centers and other infrastructure, an increase of more than 42 percent from 2023. The company expects even more explosive growth in that spending next year.

Meta’s total operating expenses are up about 9 percent this year. But overall sales, mostly from ads, grew more than 22 percent, leaving the company with higher margins and bigger profits even as it pours billions of dollars into the Llama effort.

Meanwhile, OpenAI, considered the current leader in the development of cutting-edge AI, is spending heavily even though it charges developers for access to its models. The company, which for now remains a nonprofit venture, has said it is training GPT-5, a successor to the model that currently powers ChatGPT. OpenAI has said GPT-5 will be larger than its predecessor, but it has said nothing about the computer cluster it is using for training. OpenAI has also said that in addition to scale, GPT-5 will include other innovations, including a newly developed approach to reasoning.

CEO Sam Altman has said GPT-5 will be a “significant leap forward” over its predecessor. Last week, Altman responded to a news report stating that OpenAI’s next frontier model would be released by December, writing on X that “fake news is out of control.”

On Tuesday, Google CEO Sundar Pichai said the company’s latest version of the Gemini family of generative AI models is in development.

Meta’s open approach to AI sometimes proves controversial. Some AI experts worry that making vastly more powerful AI models freely available could be dangerous because it could help criminals launch cyberattacks or automate the design of chemical or biological weapons. Although Llama was fine-tuned before its release to limit misbehavior, removing these restrictions is relatively trivial.

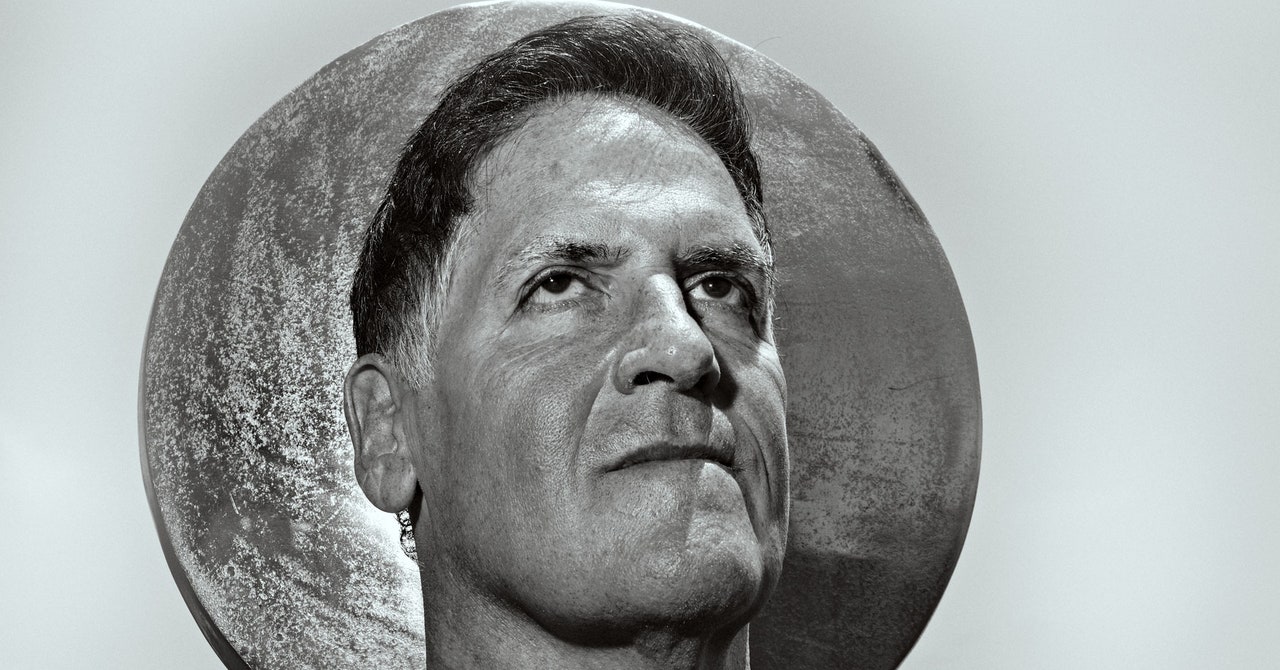

Zuckerberg remains optimistic about the open source strategy, even as Google and OpenAI push their own systems. “It seems pretty clear to me that open source is going to be the most cost-effective, adaptable, reliable, performant and easiest-to-use option available to developers,” he said Wednesday. “And I’m proud that Llama is leading this.”

Zuckerberg added that Llama 4’s new capabilities should be able to power a wider range of features across Meta’s services. Today, the signature offering based on Llama models is a ChatGPT-like chatbot known as Meta AI, which is available on Facebook, Instagram, WhatsApp and other apps.

More than 500 million people a month use Meta AI, Zuckerberg said. Over time, Meta expects to generate revenue through ads in the feature. “There will be an expanding set of requests that people use it for, and the monetization opportunities will be there over time as we get there,” Meta CFO Susan Li said on the call Wednesday. With that ad revenue potential, Meta just might be able to subsidize Llama for everyone else.